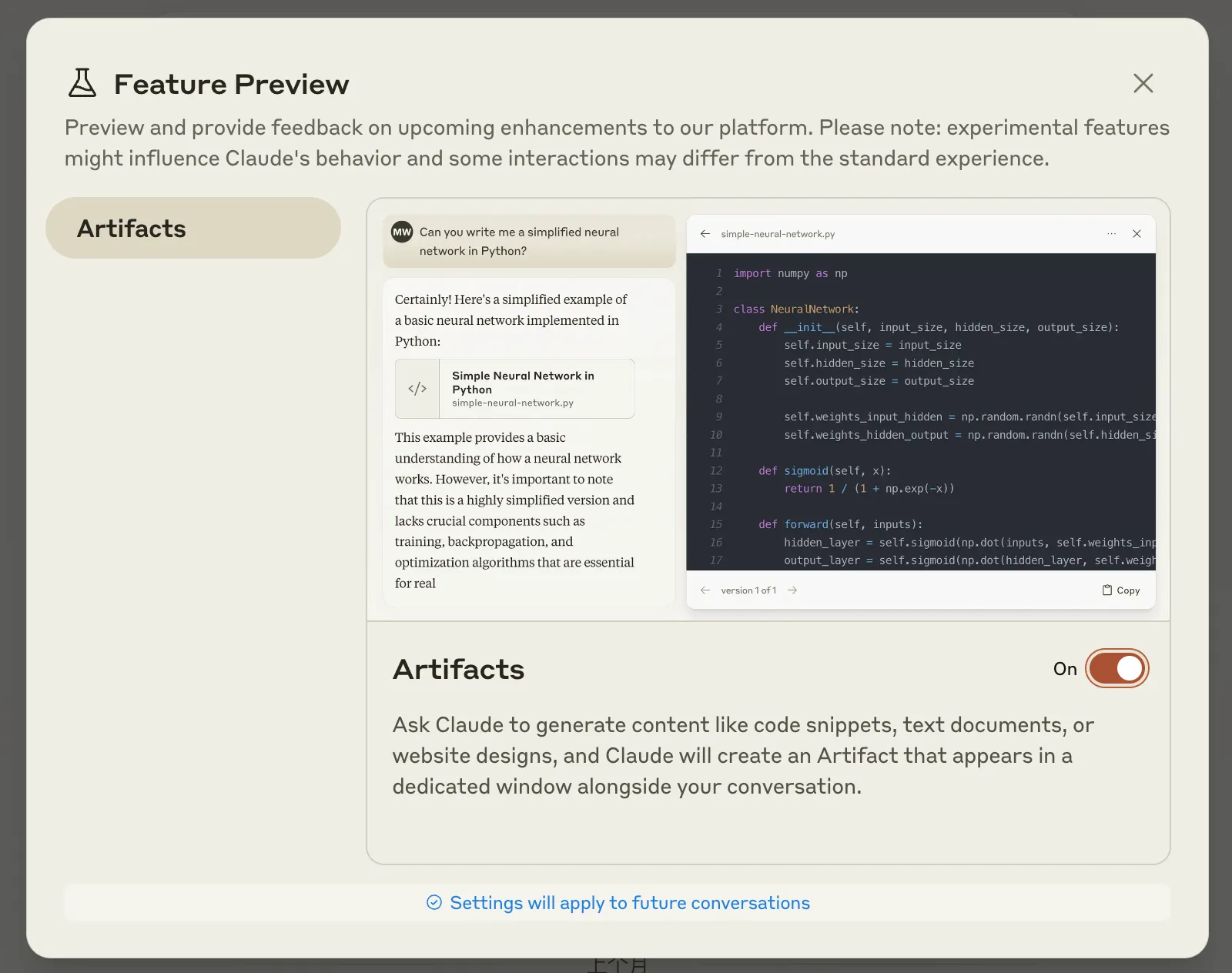

Creating Games with the Free Claude 3.5 and Artifacts

Anthropic has quietly released the Claude 3.5 model, which not only improves model performance but also adds support for Artifacts. After trying it out, I feel this is what the future of AI should look like, and I find it even more impressive than the earlier GPT-4o.

Coding in the Artifacts workspace

Before Artifacts, if you wanted ChatGPT or another LLM to help implement a program, you first described the functional requirements and then copied the AI’s code into your own environment to run it. If the result didn’t meet expectations, you had to ask again, modify the code, and run it again. You repeated this loop until you were satisfied or gave up (AI still does not write some complex code well).

Now with Artifacts, once you provide specific functional requirements, Claude creates a workspace where the AI can view and edit the code in real time as the conversation continues. This turns Claude from an ordinary conversational AI into a collaborative work environment. Perhaps in the near future we will be able to bring Claude into our own projects and let it take part in development like a regular team member.

The vision is certainly beautiful, but Artifacts is still a beta feature at present. How does it perform? Let’s try and see.

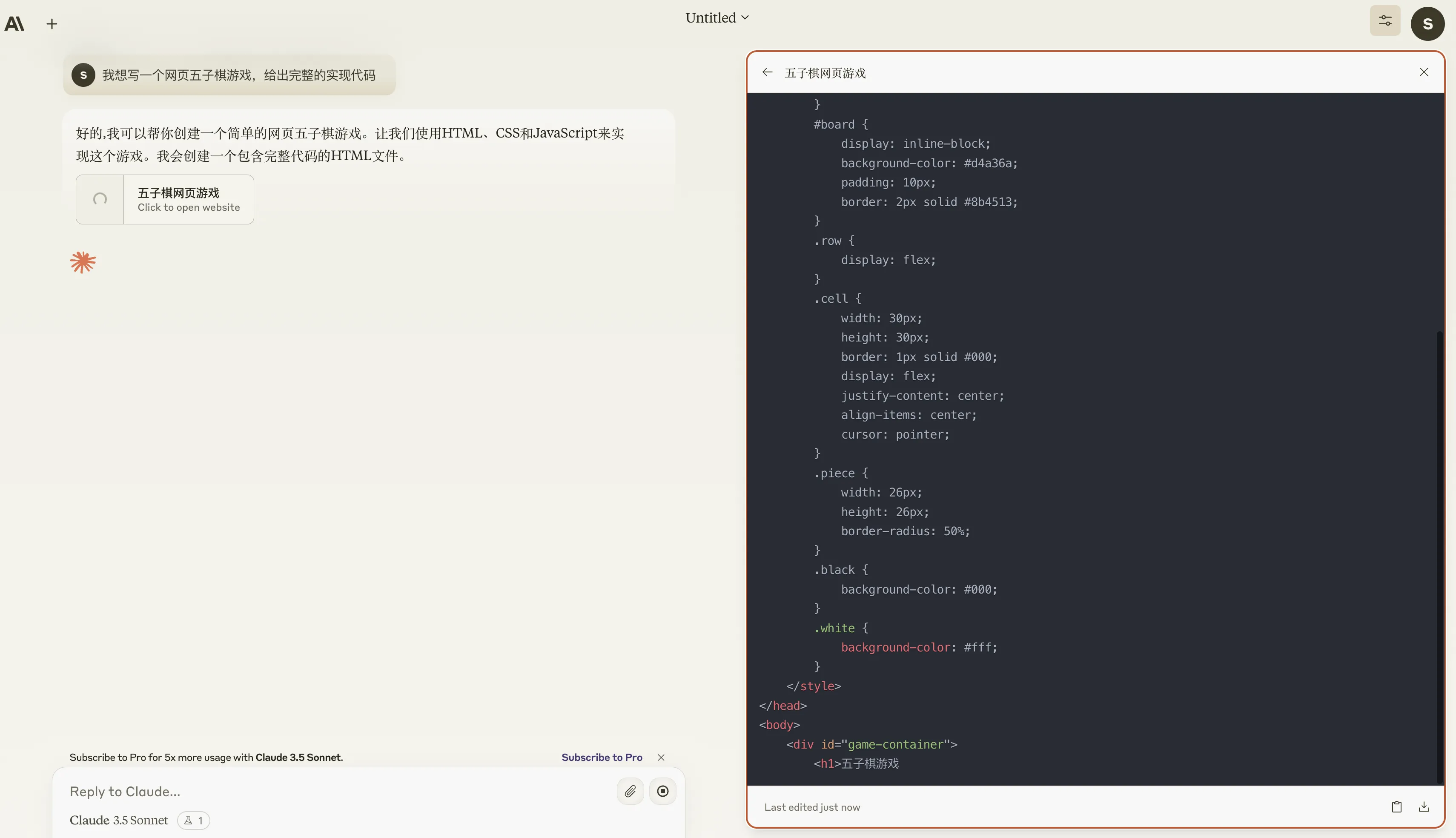

AI Implementation of Gomoku Game

First, I asked Claude to implement a web version of Gomoku. My prompt was very simple:

I want to write a web Gomoku game, please provide the complete implementation code

Then, I saw the Artifacts workspace, which already had a code file and was automatically generating code.

Artifacts generating Gomoku code file

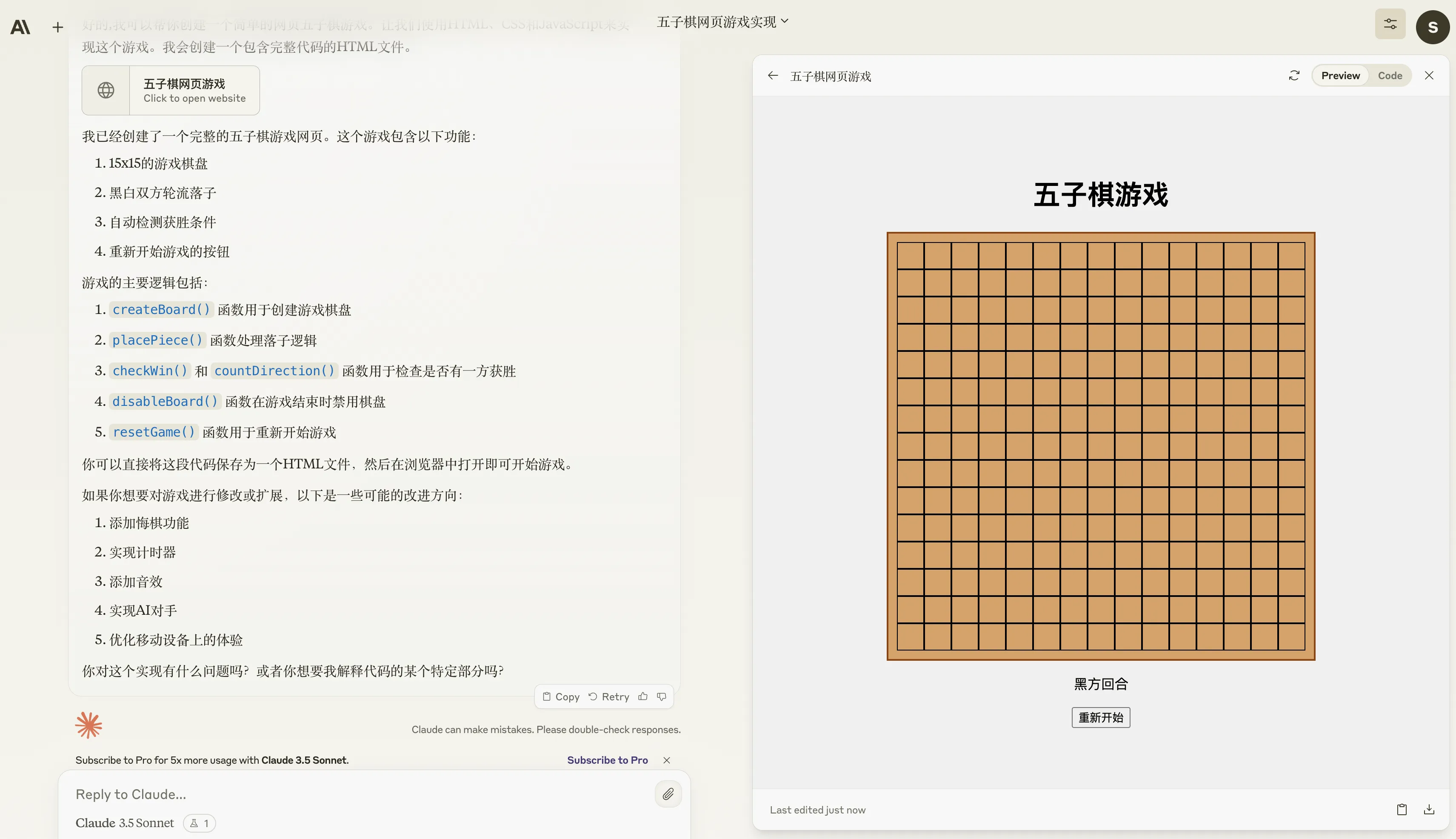

After the code was generated, it directly jumped to a preview page where I could see the Gomoku interface. I originally thought this preview page was static, but surprisingly, I could interact on this page. Clicking on positions on the board would alternately place black and white pieces.

Artifacts generated Gomoku interface preview

Since it could place pieces, I wondered if it had implemented the rules of Gomoku. So I played a bit and found that AI had indeed implemented the rules of Gomoku, and even had win/loss judgment.

This completely exceeded expectations!!! With just one sentence, really just one sentence, AI implemented a fairly complete Gomoku game, with a beautiful interface, rule judgment, and win/loss determination. It’s already completely playable.
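
The generated code is not reproduced here. As a rough illustration of what "win/loss judgment" involves, a minimal Python sketch might look like the following. The 15x15 board size, the names `new_board`, `place_stone`, and `is_winning_move`, and the "five or more in a row wins" rule are all assumptions made for illustration, not Claude's actual output.

```python
from typing import List

BOARD_SIZE = 15  # assumed board size; the post does not state one
EMPTY, BLACK, WHITE = 0, 1, 2

Board = List[List[int]]


def new_board(size: int = BOARD_SIZE) -> Board:
    """Create an empty square board."""
    return [[EMPTY] * size for _ in range(size)]


def count_in_direction(board: Board, row: int, col: int, d_row: int, d_col: int) -> int:
    """Count consecutive stones matching (row, col), walking away from it."""
    player = board[row][col]
    size = len(board)
    count = 0
    r, c = row + d_row, col + d_col
    while 0 <= r < size and 0 <= c < size and board[r][c] == player:
        count += 1
        r += d_row
        c += d_col
    return count


def is_winning_move(board: Board, row: int, col: int) -> bool:
    """Check whether the stone at (row, col) is part of a line of five or more."""
    if board[row][col] == EMPTY:
        return False
    # Horizontal, vertical, and the two diagonals.
    for d_row, d_col in ((0, 1), (1, 0), (1, 1), (1, -1)):
        total = (
            1
            + count_in_direction(board, row, col, d_row, d_col)
            + count_in_direction(board, row, col, -d_row, -d_col)
        )
        if total >= 5:
            return True
    return False


def place_stone(board: Board, row: int, col: int, player: int) -> bool:
    """Place a stone and report whether that move wins the game."""
    if board[row][col] != EMPTY:
        raise ValueError("cell already occupied")
    board[row][col] = player
    return is_winning_move(board, row, col)
```

Only the most recent move needs checking, because a new line of five must pass through the stone that was just placed. Variants of Gomoku that forbid lines longer than five would need a stricter comparison than `>= 5`.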

The key is that AI also gave some suggestions for further improving the game, such as:

- Adding an undo function

- Implementing a timer

- Adding sound effects

- Implementing an AI opponent

- Optimizing the experience on mobile devices

It feels like this is not an AI, but a flesh-and-blood, opinionated, experienced front-end engineer.

Gomoku Game Demo

You can experience the AI-written Gomoku game at the demo address in the blog post.

After I showed a friend the Gomoku game that Claude 3.5 generated, they were a bit skeptical about where the AI got the code. After all, Gomoku implementations are everywhere on the internet, and the AI might simply have learned someone else’s code and reused it. That is a fair point, so the only option is to raise the difficulty and see how Claude 3.5 performs.

AI Implementation of Tetris

Next, let’s try a more complex game, Tetris. Again starting from a simple prompt, the AI generated an initial Python version. To run it directly in the blog, I asked it to switch to a web version. When I embedded that first web version in the blog, the border was gone, and pressing the up and down keys to switch shapes also scrolled the page. So I asked Claude to fix these problems, and unexpectedly it got the code right in one go.

As you can see from the image below, after re-prompting, Claude provided a second version of the file, and then the preview page was a complete Tetris game, controllable by keyboard to move and rotate blocks.

Artifacts generated Tetris interface preview

Through further prompts in the same conversation, I had some functions modified, and by the 5th version we had a very good functional prototype. Throughout the conversation, Claude 3.5’s understanding was quite good, not very different from GPT-4, and the final result exceeded expectations.
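
The generated Tetris code is not shown here either. To give a sense of two mechanics any such prototype needs, rotating a block and clearing completed rows, here is a small Python sketch. The grid representation (lists of 0/1 cells) and the function names `rotate_clockwise` and `clear_full_rows` are assumptions for illustration and say nothing about how Claude's web version is actually structured.

```python
from typing import List, Tuple

Grid = List[List[int]]  # 0 = empty cell, non-zero = filled cell


def rotate_clockwise(shape: Grid) -> Grid:
    """Rotate a piece matrix 90 degrees clockwise."""
    # Reversing the rows and then transposing gives a clockwise rotation.
    return [list(row) for row in zip(*shape[::-1])]


def clear_full_rows(board: Grid) -> Tuple[Grid, int]:
    """Remove completely filled rows and add empty rows at the top.

    Returns the new board and the number of rows cleared,
    which a game loop could use to update the score.
    """
    width = len(board[0])
    remaining = [row for row in board if not all(row)]
    cleared = len(board) - len(remaining)
    empty_rows = [[0] * width for _ in range(cleared)]
    return empty_rows + remaining, cleared
```

A real game would also check the rotated shape for collisions with walls and settled blocks before accepting the rotation; that check is left out to keep the sketch short.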

Tetris Demo

I’ve directly embedded the AI-generated code into the blog, and you can experience the Tetris game in the demo below.

Judging from this iterative process of modifying Tetris, the AI’s comprehension and coding skills are quite strong, probably on par with GPT-4.

Everyone Using AI to Write Programs

Judging from the simple games above, Artifacts performs impressively. I also tried using it for some web visualizations of algorithms, and Claude 3.5 produced very good examples. For instance, I asked it to implement a token bucket rate limiting algorithm where you can set the token bucket capacity and rate, as well as the token consumption speed, and then have it draw a request monitoring curve.

After setting the parameters each time, it would dynamically generate a monitoring curve. Then you could pause at any time, reset the parameters, and continue to generate new monitoring curves. After setting a few parameters and running, I got the following result:

Artifacts generated token bucket rate limiting algorithm interface preview
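
For readers unfamiliar with the algorithm behind that visualization, the following is a minimal Python sketch of a token bucket driven by simulated one-second ticks. It is not the code Claude generated: the class `TokenBucket`, the function `simulate`, and the choice to start with a full bucket are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TokenBucket:
    capacity: float      # maximum number of tokens the bucket can hold
    refill_rate: float   # tokens added per second
    tokens: float = field(init=False)

    def __post_init__(self) -> None:
        # Assumption: the bucket starts full.
        self.tokens = self.capacity

    def refill(self, elapsed_seconds: float) -> None:
        """Add tokens for the elapsed time, never exceeding capacity."""
        self.tokens = min(self.capacity, self.tokens + self.refill_rate * elapsed_seconds)

    def try_consume(self, amount: float = 1.0) -> bool:
        """Take tokens if enough are available; otherwise reject the request."""
        if self.tokens >= amount:
            self.tokens -= amount
            return True
        return False


def simulate(bucket: TokenBucket, requests_per_second: int, seconds: int) -> List[int]:
    """Return how many requests were accepted in each one-second tick."""
    accepted_per_tick: List[int] = []
    for _ in range(seconds):
        bucket.refill(1.0)
        accepted = sum(1 for _ in range(requests_per_second) if bucket.try_consume())
        accepted_per_tick.append(accepted)
    return accepted_per_tick
```

With a capacity of 10, a refill rate of 5 tokens per second, and 8 requests per second, `simulate(TokenBucket(10, 5), 8, 4)` returns `[8, 7, 5, 5]`: the initial burst is absorbed by the full bucket, after which throughput settles at the refill rate. Plotting that list over time gives the kind of monitoring curve described above.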

For people without front-end development experience, being able to quickly build a visual algorithm demonstration is truly magical. With Artifacts, anyone can step outside their own field, no longer limited by their technical experience, and quickly turn some of their ideas into reality.

This matters especially for people who want to learn programming. In the past, you basically had to study books first, learn the skills, and then gradually apply them to projects. Now you can bring your questions to the AI, let it help with the implementation, and ask it about any problems you hit along the way. This learning process is more interesting. After all, if you asked me to first learn a pile of front-end syntax and then stumble through implementing a Tetris game, I would give up very quickly. But if I can generate a complete, runnable game with just one sentence and then discuss the implementation and code details with the AI, it is much more interesting and gives a greater sense of achievement.

Artifacts’ Shortcomings

Of course, Artifacts is currently only a beta feature and still has many shortcomings. The article title mentions using the free Claude 3.5, but the free version has quite a few limitations. First, there’s a limit on conversation length. If the content is a bit long, adding attachments triggers this prompt:

Your message will exceed the length limit for this chat. Try attaching fewer or smaller files or starting a new conversation. Or consider upgrading to Claude Pro.

Even without attachments, too many conversation turns will prompt:

Your message will exceed the length limit for this chat. Try shortening your message or starting a new conversation. Or consider upgrading to Claude Pro.

Moreover, the free version limits the number of messages you can send per hour, and that limit is easy to hit. However, these are all minor issues that upgrading to Pro can solve. In my opinion, Artifacts’ biggest flaw right now is that the workspace functionality is too limited and still quite far from being truly practical.

Currently (as of 2024.06.22), code in the workspace can only be generated by the AI. You cannot edit it yourself or upload your own code, so making even simple modifications on top of the AI’s code is very difficult. Additionally, within a conversation, everything generated in the workspace is put together in one place; you can’t generate multiple files as in a file system.

For example, I asked for a web backend service in Python using the FastAPI framework, with APIs that require authentication and with convenient Docker deployment. Claude 3.5 generated only one file in the workspace and put main.py, Dockerfile, requirements.txt, and other content all in it. To use it, I still need to create folders locally, copy the contents into separate files, and then initialize the environment to run it.
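
Until the workspace supports multiple files, the manual copying can be partly scripted. The sketch below assumes, purely hypothetically, that each file's content in the combined output is preceded by a marker line such as `# file: main.py`; the real output may be laid out differently, so `FILE_MARKER` and both function names are placeholders to adapt.

```python
from pathlib import Path
from typing import Dict, List, Optional

# Hypothetical marker; adjust to whatever the combined output actually uses.
FILE_MARKER = "# file: "


def split_combined_output(text: str) -> Dict[str, str]:
    """Split one combined text into {relative_path: content} using marker lines."""
    files: Dict[str, List[str]] = {}
    current: Optional[str] = None
    for line in text.splitlines():
        if line.startswith(FILE_MARKER):
            current = line[len(FILE_MARKER):].strip()
            files[current] = []
        elif current is not None:
            files[current].append(line)
    # Trim blank lines around each file and end it with a single newline.
    return {name: "\n".join(lines).strip("\n") + "\n" for name, lines in files.items()}


def write_project(files: Dict[str, str], root: Path) -> None:
    """Write each file under root, creating parent folders as needed."""
    for name, content in files.items():
        target = root / name
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(content, encoding="utf-8")
```

For example, `write_project(split_combined_output(pasted_text), Path("my_service"))` would create `my_service/main.py`, `my_service/Dockerfile`, and so on, provided the pasted text follows the assumed marker convention. The sketch does not validate paths, so it should only be run on output you have read.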

If the Artifacts workspace could create folders, hold the entire project’s code in them, and offer a way to download the whole project, it would be much more convenient. Going even further, Artifacts could provide a Python runtime environment so that users can run and debug code directly in the workspace. In this way, Artifacts could become a true collaborative work environment, not just a code generation tool.

Ideally, a future Artifacts would generate and manage multiple folders and files in the workspace, let us manually modify parts of the code, and support debugging in the workspace. The AI could then discover problems during debugging and fix them. In other words, the AI would understand the entire project’s code and take part in every stage of development together with humans.

Perhaps such a version of Artifacts will arrive soon.