How ChatGPT Taught Me to Write a Tampermonkey Script

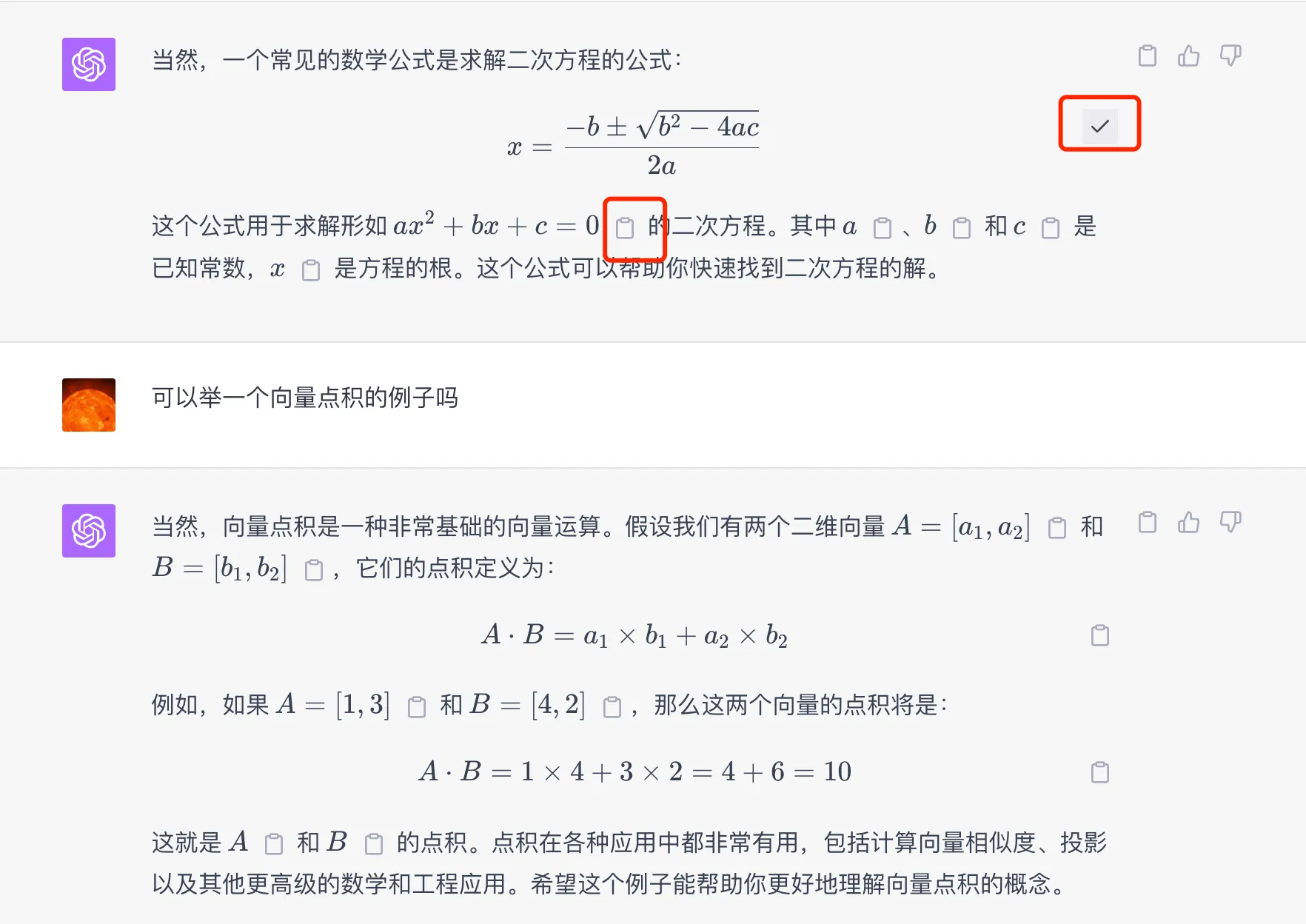

Learning frontend development with ChatGPT as a teacher? That sounds a bit far-fetched. Frontend work is closely tied to UI, and discussing it with ChatGPT, which has no multimodal capabilities, seems difficult just thinking about it. Recently, however, with ChatGPT's help I quickly wrote a Tampermonkey script that copies the LaTeX source of mathematical formulas shown in ChatGPT's chat interface.
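The following is a minimal sketch of how such a script could work, not the actual script described here. It assumes that ChatGPT renders formulas with KaTeX, which keeps each formula's original TeX source in an `<annotation encoding="application/x-tex">` element inside the `.katex` node. The `@match` URLs, the click-to-copy behavior, and the names `extractLatex`, `TexSource`, and `FormulaNode` are illustrative assumptions. It is written in TypeScript, so it needs to be compiled to JavaScript (or have its type annotations stripped) before being pasted into Tampermonkey.

```typescript
// ==UserScript==
// @name         Copy LaTeX from ChatGPT (sketch)
// @match        https://chat.openai.com/*
// @match        https://chatgpt.com/*
// @grant        none
// ==/UserScript==

// Assumption: formulas are rendered by KaTeX, which stores the original TeX
// source in <annotation encoding="application/x-tex"> inside each .katex node.
const TEX_ANNOTATION = 'annotation[encoding="application/x-tex"]';

// Minimal shapes we rely on, so the extraction logic does not need DOM types.
interface TexSource {
  textContent: string | null;
}

interface FormulaNode {
  querySelector(selector: string): TexSource | null;
}

// Return the trimmed TeX source of one rendered formula, or null if absent/blank.
function extractLatex(formula: FormulaNode): string | null {
  const annotation = formula.querySelector(TEX_ANNOTATION);
  const tex = annotation?.textContent?.trim();
  return tex ? tex : null;
}

// Browser-only wiring: outside a browser (e.g. under Node) there is no
// document, so this block is skipped.
const doc = (globalThis as any).document;
if (doc) {
  // Hypothetical behavior: clicking anywhere on a formula copies its LaTeX.
  doc.addEventListener("click", (event: any) => {
    const formula = event.target?.closest?.(".katex");
    if (!formula) return;
    const tex = extractLatex(formula);
    if (tex) {
      // Clipboard API; works on HTTPS pages in response to a user click.
      void (globalThis as any).navigator.clipboard.writeText(tex);
    }
  });
}
```

Keeping `extractLatex` separate from the click handler means the extraction logic can be checked without a browser, while the handler only decides which formula was clicked.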

As a backend developer with no frontend experience, without ChatGPT I would first have had to dig through documentation and spend a lot of time just getting a prototype to work. Any problem along the way would have meant hunting for answers on my own, which could drag on for a long time and might have made me give up halfway. With ChatGPT as a teacher, I could simply ask whenever I got stuck, which made the whole development experience far better.

Once again, I marvel at how much ChatGPT expands what one person can do technically, and how much it improves one's ability to solve problems!