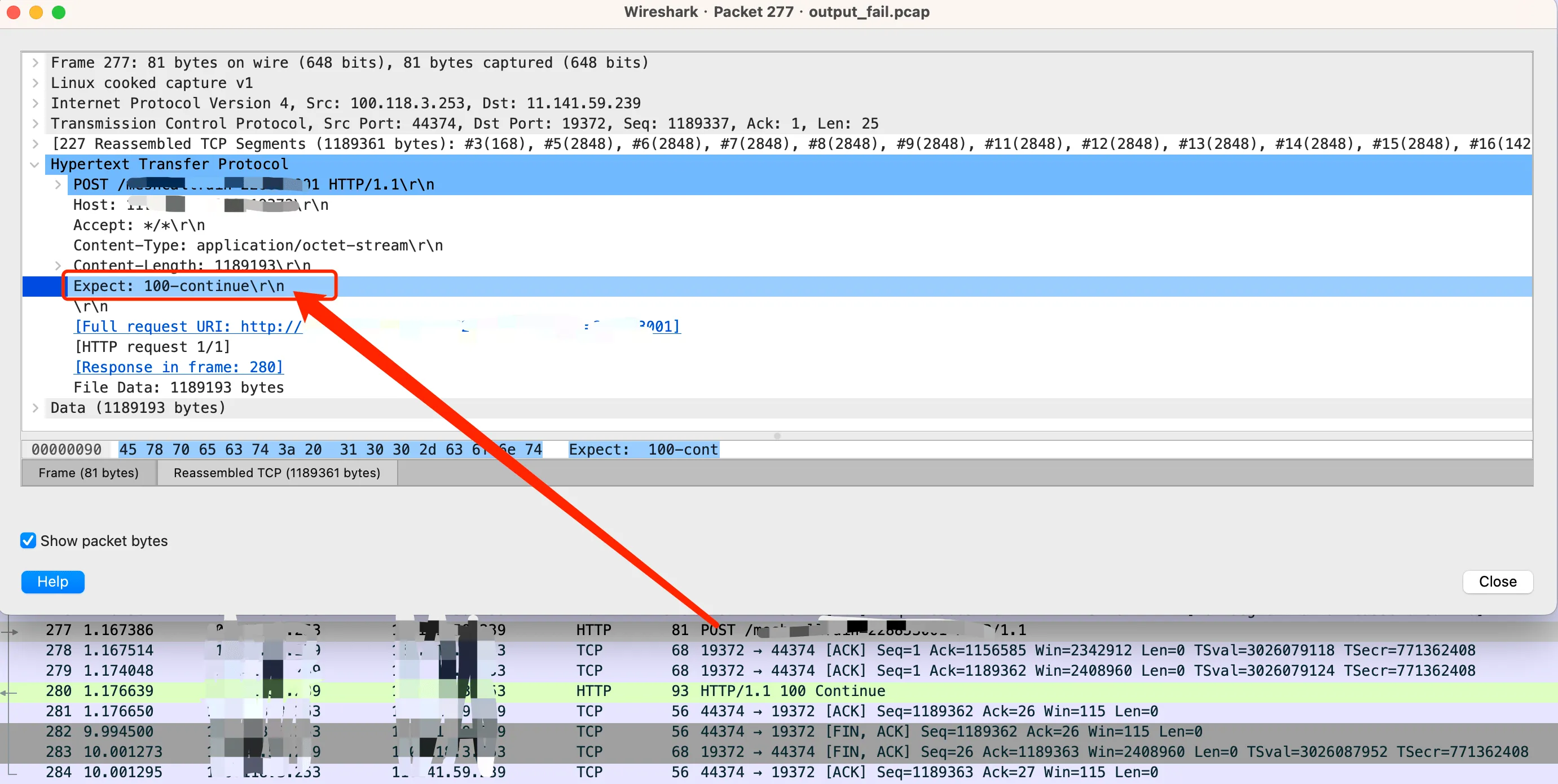

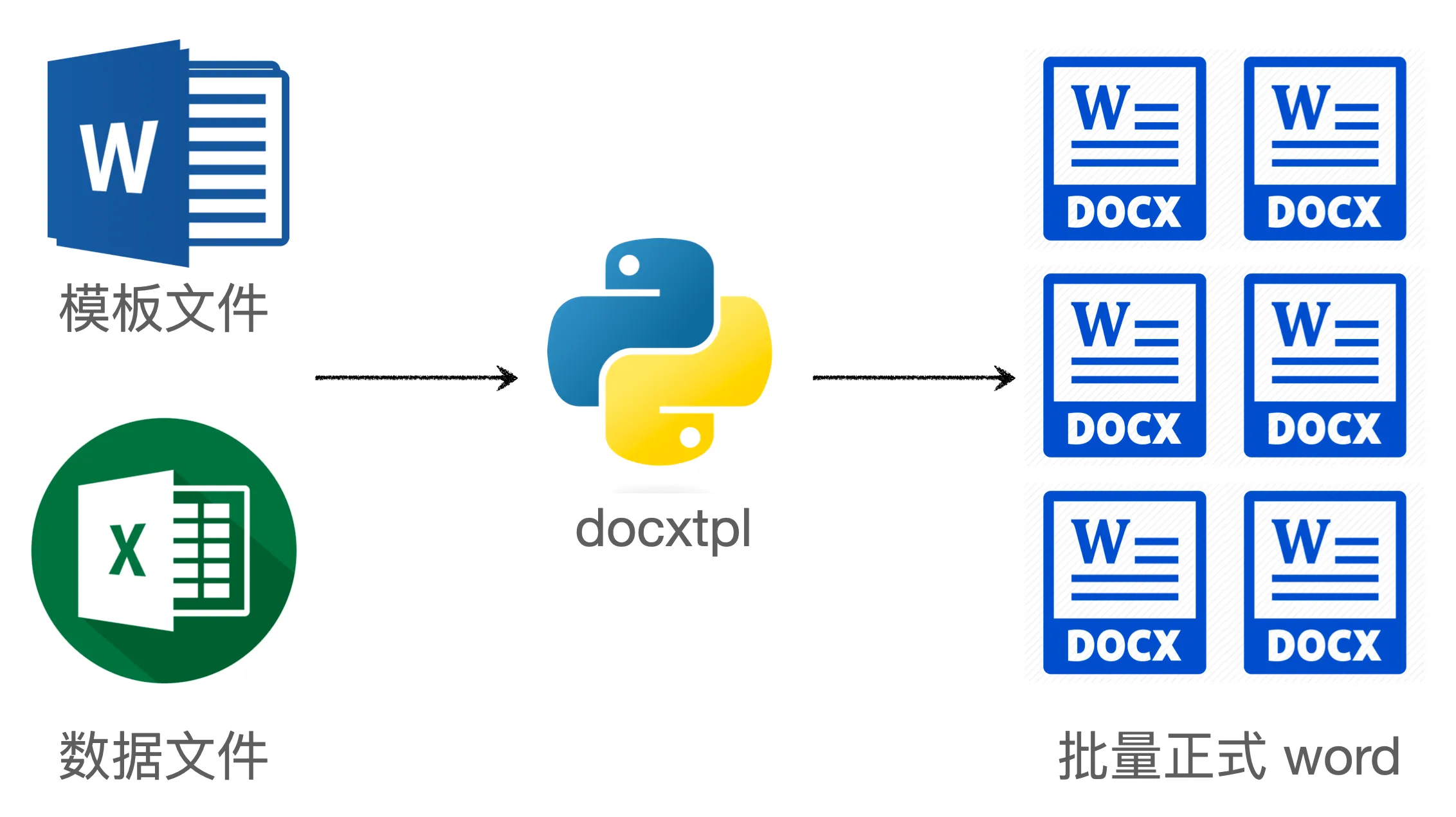

Using Python Template Library docxtpl to Batch Create Word Documents

Repetitive work is tedious, and Python can automate much of it. This article shows how to use the Python template library docxtpl to generate many Word documents from a single template in a very short time, replacing a lot of manual editing.
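As a rough preview of the idea, the sketch below renders one Word file per data record. It assumes a template named `template.docx` containing placeholders such as `{{ name }}`, and records that each carry a `name` field. The file name, the field names, and the helpers `output_path` and `batch_render` are all hypothetical, chosen only for illustration. The only docxtpl calls used are `DocxTemplate(...)`, `render(...)`, and `save(...)`.

```python
from pathlib import Path


def output_path(out_dir, record):
    """Build an output file path from the record's 'name' field (hypothetical field)."""
    # Replace characters that are awkward in file names with underscores.
    safe = "".join(
        c if c.isalnum() or c in "-_ " else "_" for c in record["name"]
    ).strip()
    return Path(out_dir) / f"{safe}.docx"


def batch_render(template_path, records, out_dir):
    """Render one .docx per record and return the list of written paths."""
    # Imported here so output_path() can be used without docxtpl installed.
    from docxtpl import DocxTemplate  # pip install docxtpl

    Path(out_dir).mkdir(parents=True, exist_ok=True)
    written = []
    for record in records:
        # Load a fresh copy of the template for every document.
        doc = DocxTemplate(template_path)
        # The record's keys fill the matching {{ placeholders }} in the template.
        doc.render(record)
        target = output_path(out_dir, record)
        doc.save(str(target))
        written.append(target)
    return written
```

A call might look like `batch_render("template.docx", [{"name": "Alice", "amount": 100}], "output")`, where the template and the `amount` field are again assumptions. Loading the template anew for each record is slightly slower than reusing one object, but it keeps every document independent of the previous render.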

Python docxtpl batch creation of Word files


Update: Doing this in Python can be a bit difficult for beginners, so I wrote an online tool that batch-generates Word documents from a template and data. Tool address: Online Batch Generate Word Tool