Dive into C++ Object Memory Layout with Examples

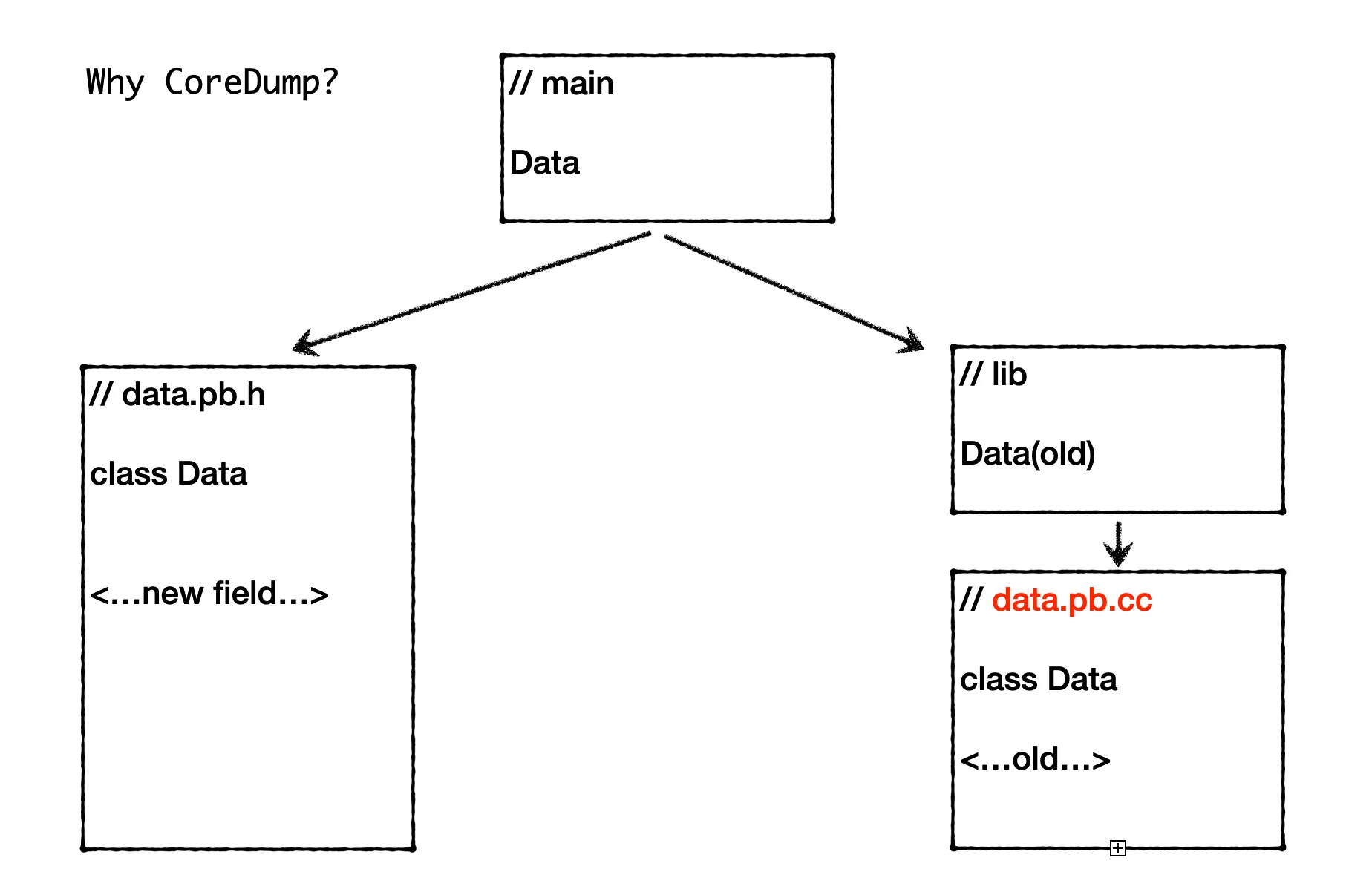

In the previous article, Analysis of C++ Process Coredump Problem Caused by Missing Bazel Dependencies, the binary used different versions of the same proto objects. Their inconsistent memory layouts caused reads and writes of members to land on the wrong addresses, which ultimately crashed the process. However, we did not explore the following questions at the time:

- How are objects laid out in memory?

- How do member methods obtain the addresses of member variables?

Both questions concern the C++ object model. The book “Inside the C++ Object Model” covers this topic comprehensively and is well worth reading. However, it is not an easy read, and some of its content is hard to fully understand without hands-on practice. This article starts from practical examples to build an intuitive understanding of how a C++ class's member variables and functions are laid out in memory, which should make the book easier to follow later.